Building A Flashcards App

There are many ways to make learning a new language easier. One that I personally like is learning through flashcards. Using the Gemini API to generate flashcards in less than a minute sounds like a dream, and that is exactly what I set out to do.

The Idea

Language learning apps and flashcard apps are nothing new, but none of them let me choose the vocabulary I want to practice. When I find a technical document with a lot of new words that I want to learn, or a funny YouTube video where people converse using uncommon words that I want to understand, the existing apps give me no way to practice that vocabulary. With the Gemini API, I can upload a document or video, or paste a YouTube URL, and generate flashcards in less than a minute. This freedom to learn what I actually want to learn is made possible by the Gemini API.

Approach

The project was done as part of a Google Gemini workshop. One of the criteria was to implement at least 3 of the following Gemini Gen AI capabilities. I have used the highlighted ones in this project.

Structured output/JSON mode/controlled generation

Few-shot prompting

Document understanding

Image understanding

Video understanding

Audio understanding

Function Calling

Agents

Long context window

Context caching

Gen AI evaluation

Grounding

Embeddings

Retrieval augmented generation (RAG)

Vector search/vector store/vector database

ML Ops (with Gen AI)

The user's preferred source and target languages are requested as input to avoid ambiguity. Since the goal is to extract words and their meanings to display on the card, we define the structure of the output first. For ease of understanding, I will use the example of learning German through English.

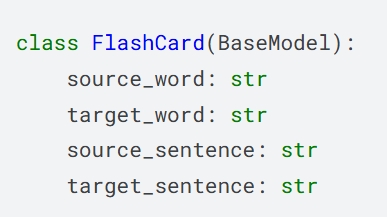

Defining the structure of the flashcard is the first step. This structured output lets the model return its result in a consistent format every time flashcards are requested.
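To make the idea concrete, here is a minimal sketch of what such a card structure might look like in Python. The field names (`word`, `meaning`, `example`) and the helper `cards_from_json` are my assumptions for illustration; the post does not show the actual structure. The schema dictionary is the kind of description a structured-output mode typically accepts, but how the project passes it to the API is not shown here.

```python
import json
from dataclasses import dataclass


@dataclass
class Flashcard:
    """One card: a word and its meaning (field names are hypothetical)."""
    word: str          # word in the target language, e.g. German
    meaning: str       # meaning in the source language, e.g. English
    example: str = ""  # optional example sentence


# JSON-Schema-style description of a list of cards (assumed shape).
FLASHCARD_SCHEMA = {
    "type": "array",
    "items": {
        "type": "object",
        "properties": {
            "word": {"type": "string"},
            "meaning": {"type": "string"},
            "example": {"type": "string"},
        },
        "required": ["word", "meaning"],
    },
}


def cards_from_json(text: str) -> list[Flashcard]:
    """Turn a JSON array that follows the schema into Flashcard objects."""
    cards = []
    for item in json.loads(text):
        cards.append(
            Flashcard(
                word=item["word"],
                meaning=item["meaning"],
                example=item.get("example", ""),
            )
        )
    return cards
```

With a fixed structure like this, every response can be parsed the same way regardless of whether it came from a document or a video.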

Having a complete initial list of words for each supported language is the second step. The word lists, in PDF form, are used as input to the API, and flashcards are generated for these words. The generated cards are then stored in an SQL database so that a set of flashcards is always available without invoking the API to generate them. Function calls are defined to fetch the stored values.

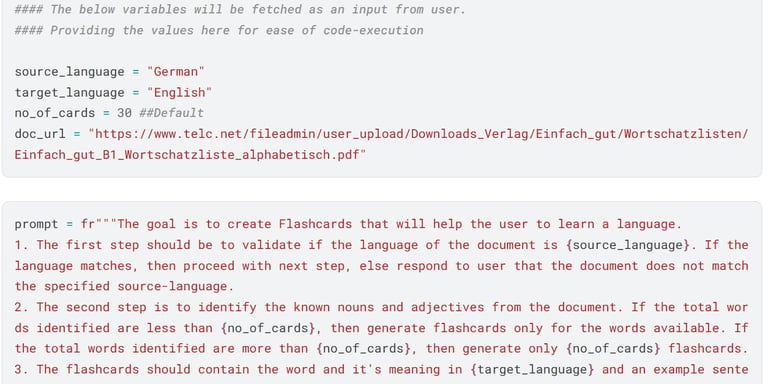
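The storage step can be sketched with Python's built-in `sqlite3` module. The table name, column names, and the functions `init_db`, `save_cards`, and `fetch_cards` are assumptions of mine; the post only says that an SQL database is used and that functions fetch the stored values.

```python
import sqlite3


def init_db(conn: sqlite3.Connection) -> None:
    """Create the flashcards table if it does not exist yet."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS flashcards (
            id INTEGER PRIMARY KEY,
            source_lang TEXT NOT NULL,
            target_lang TEXT NOT NULL,
            word TEXT NOT NULL,
            meaning TEXT NOT NULL,
            UNIQUE (source_lang, target_lang, word)
        )
        """
    )


def save_cards(conn, source_lang, target_lang, cards):
    """Store (word, meaning) pairs; duplicates are silently skipped."""
    conn.executemany(
        "INSERT OR IGNORE INTO flashcards "
        "(source_lang, target_lang, word, meaning) VALUES (?, ?, ?, ?)",
        [(source_lang, target_lang, word, meaning) for word, meaning in cards],
    )
    conn.commit()


def fetch_cards(conn, source_lang, target_lang, limit):
    """Return up to `limit` stored cards for a language pair."""
    rows = conn.execute(
        "SELECT word, meaning FROM flashcards "
        "WHERE source_lang = ? AND target_lang = ? ORDER BY id LIMIT ?",
        (source_lang, target_lang, limit),
    ).fetchall()
    return [{"word": word, "meaning": meaning} for word, meaning in rows]
```

A function shaped like `fetch_cards`, with simple typed arguments and a JSON-friendly return value, is also the kind of function that could be exposed to the model through function calling, though the post does not show how that wiring is done.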

User inputs are collected to determine the user's goal. Variables such as the source language, target language, and number of flashcards are set before the API call is made. This avoids ambiguity and prevents the model from making assumptions about the user's requirements. If the document or video matches the user's request, the code proceeds with flashcard generation; if not, the API returns a message that the request does not match the input.

Coding Phases

Prompt Engineering

There are two API calls with prompts in this code: the first processes a document, and the second processes a video. The prompts change based on the user's input, so each prompt has to be structured to include the user inputs.

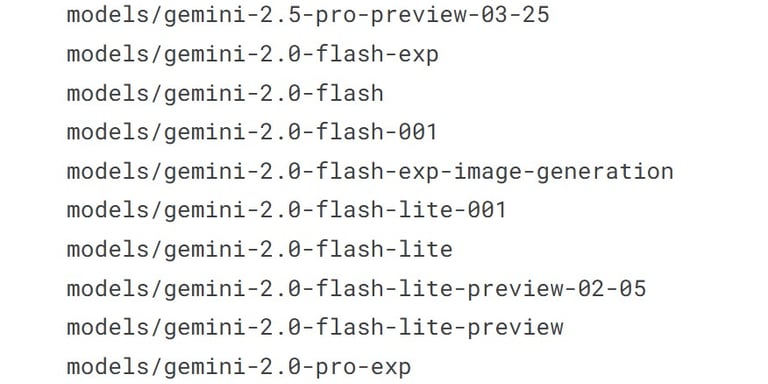

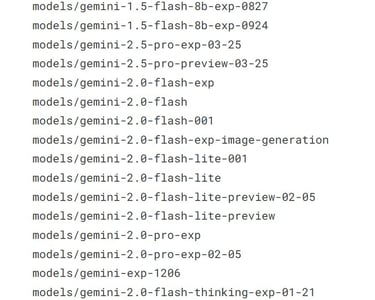
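As a rough illustration of how user inputs can be folded into a prompt, the sketch below validates the inputs first and then builds the prompt text. The class `FlashcardRequest`, the function `build_prompt`, and the prompt wording are all hypothetical; the project's actual prompts are the ones shown in the images.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlashcardRequest:
    """User inputs collected before any API call (names are hypothetical)."""
    source_language: str
    target_language: str
    num_cards: int

    def __post_init__(self):
        # Reject ambiguous input early instead of letting the model guess.
        if not self.source_language.strip() or not self.target_language.strip():
            raise ValueError("source and target language must be given")
        if self.num_cards < 1:
            raise ValueError("num_cards must be at least 1")


def build_prompt(req: FlashcardRequest, input_kind: str) -> str:
    """Build the prompt for a 'document' or 'video' input."""
    if input_kind not in ("document", "video"):
        raise ValueError("input_kind must be 'document' or 'video'")
    return (
        f"From the attached {input_kind}, pick {req.num_cards} words in "
        f"{req.target_language} and give each word's meaning in "
        f"{req.source_language}. "
        f"If the {input_kind} contains no {req.target_language} content, "
        "reply with a message saying the request does not match the input."
    )
```

Keeping the inputs in one validated object means both API calls can share the same checks and differ only in the `input_kind` they pass.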

Deciding on the model

Although Google Gemini offers a few general-purpose models that can handle most tasks, it also provides multiple models tailored to particular use cases. This project uses the efficient gemini-2.0-flash model for both document and video processing.

Processing the output

Document and video processing might seem straightforward, but the tricky part is evaluating the input and output of the first API call and channeling the result to further API calls or other functions. Gemini can also evaluate AI responses with AI and feed the result back in a loop; this project, however, does not require that step. I have also not yet included a human-interaction feature, which will increase the complexity of input and output evaluation down the line.
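One way to handle the output before passing it on is sketched below. It assumes the model replies either with a JSON array of cards or with a JSON object carrying a `message` when the input does not match the request. That response shape, the `word` and `meaning` keys, and the function `parse_response` are my assumptions, not the project's documented format.

```python
import json


def parse_response(text: str, expected_count: int):
    """Split a model reply into (cards, message).

    Returns a list of valid cards and None, or an empty list and the
    mismatch message when the model reports that the input did not
    match the request.
    """
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError("response is not valid JSON") from exc

    # Assumed mismatch format: {"message": "..."}
    if isinstance(data, dict) and "message" in data:
        return [], data["message"]

    if not isinstance(data, list):
        raise ValueError("expected a JSON array of cards")

    # Keep only entries that carry both a word and a meaning.
    cards = [
        card for card in data
        if isinstance(card, dict) and card.get("word") and card.get("meaning")
    ]
    # Never hand on more cards than the user asked for.
    return cards[:expected_count], None
```

Only the validated cards would then be handed to the next function, for example the one that stores them in the database.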

Next Steps: work in progress ...

Flashcard generation using structured output has been successful. The next step is to develop a GUI for a web app as well as a mobile app. Users will also be able to store their personal API key so they can experience the joy of discovering Gemini for free.